One can lie—or at least misdirect—by telling only truths.

Suppose Don shares news of every violent crime committed by immigrants (while ignoring those committed by native-born citizens, and never sharing evidence of immigrants positively contributing to society). He spreads the false impression that immigrants are dangerous and do more harm than good. Since this isn’t true, and promulgates harmful xenophobic sentiments, I expect most academics in my social circles would judge Don very negatively, as both (i) morally bad, and (ii) intellectually dishonest.

It would not be a convincing defense for Don to say, “But everything I said is literally true!” What matters is that he led his audience to believe much more important falsehoods.1

I think broadly similar epistemic vices (not always deliberate) are much more common than is generally appreciated. Identifying them requires judgment calls about which truths are most important. These judgment calls are contestable. But I think they’re worth making. (Others can always let us know if they think our diagnoses are wrong, which could help to refocus debate on the real crux of the disagreement.) People don’t generally think enough about moral prioritization, so encouraging more importance-based criticism could provide helpful correctives against common carelessness and misfocus.

Moral misdirection thus strikes me as an important and illuminating concept.2 In this post, I’ll first take an initial stab at clarifying the idea, and then suggest a few examples. (Feel free to add more in the comments!)

Defining Moral Misdirection

Moral misdirection involves leading people morally astray, specifically by manipulating their attention. So explicitly asserting a sincerely believed falsehood doesn’t qualify. But misdirection needn’t be entirely deliberate, either. Misdirection could be subconscious (perhaps a result of motivated reasoning, or implicit biases), or even entirely inadvertent—merely negligent, say. In fact, deliberately implicating something known to be false won’t necessarily count as “misdirection”. Innocent examples include simplification, or pedagogical “lies-to-children”. If a simplification helps one’s audience to better understand what’s important, there’s nothing dishonest about that—even if it predictably results in some technically false beliefs.

Taking all that into account, here’s my first stab at a conceptual analysis:

Moral misdirection, as it interests me here, is a speech act that functionally operates to distract one’s audience from more important moral truths. It thus predictably reduces the importance-weighted accuracy of the audience’s moral beliefs.

Explanation: Someone who is sincerely, wholeheartedly in error may have the objective effect of leading their audiences astray, but their assertions don’t functionally operate towards that end, merely in virtue of happening to be false.3 Their good-faith erroneous assertions may rather truly aim to improve the importance-weighted accuracy of their audience’s beliefs, and simply fail. Mistakes happen.

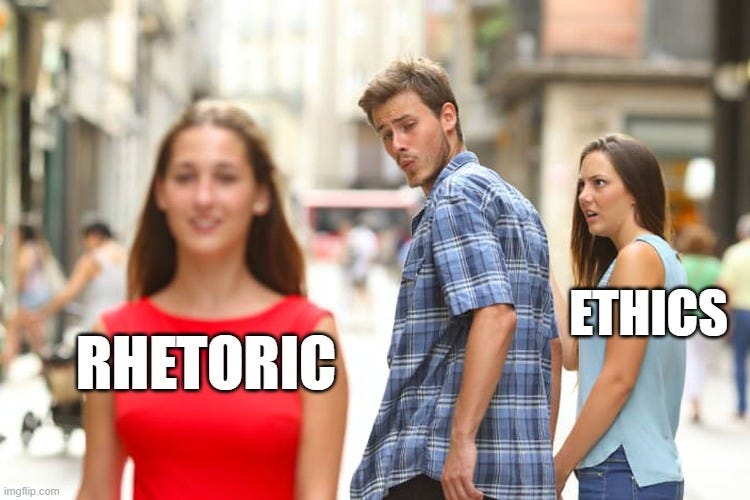

At the other extreme, sometimes people deliberately mislead (about important matters) while technically avoiding any explicit assertion of falsehoods. These bad-faith actors maintain a kind of “plausible deniability”—a sheen of superficial intellectual respectability—while deliberately poisoning the epistemic commons. I find this deeply vicious.

But very often, I believe, people are negligent communicators. They just aren’t thinking sufficiently carefully or explicitly about what’s important in the dispute at hand. They may have other (perhaps subconscious) goals that they implicitly prioritize: making “their side” look good, and the “other side” look bad. When they communicate in ways that promote these other goals at predictable cost to importance-weighted accuracy, they are engaging in moral misdirection, whether they realize it or not.

Significance: I think that moral misdirection, so understood, is a great force for ill in the world: one of the major barriers to intellectual and moral progress. It is a vice that even many otherwise “good” people routinely engage in. Its avoidance may be the most important component of intellectual integrity. It’s disheartening to consider how rare this form of intellectual integrity seems to be, even amongst intellectuals (in part because attention to the question of what is truly important is so rare). By drawing explicit attention to it, I hope to make it more common.

Three Examples

(1) Anti-Woke Culture Warriors

In a large, politically polarized country, you’ll find plenty of bad behavior spanning the political spectrum. So you can probably think of some instances of “wokeness run amok”. (If you wanted to, you could probably find a new example every week.) But as with Don the xenophobe, if you draw attention to all and only misbehavior from one specific group, you can easily exaggerate the threat they pose: creating the (mis)impression that wokeness is a grave threat to civilized society and should be our top political priority (providing sufficient reason to vote Republican, say).

As always, if someone is willing to explicitly argue for this conclusion (that wokeness really is the #1 problem in American society today), then I’ll give them points for intellectual honesty. (I’ll just disagree on the substance.)4 But I think most recognize that this claim isn’t really defensible. And if one grants that Democrats are better on the more important issues (not all would grant this, of course), then it would constitute moral misdirection for one to engage in anti-woke culture warring without stressing the far graver threats from MAGA culture. Even if some anti-woke critics were 100% correct about every particular dispute they draw attention to, it matters how important these particulars are compared to competing issues of concern.

Similar observations apply to many political disputes. Politics is absolutely full of moral misdirection. As with all intellectual vices, we find it easier to recognize when the “other side” is guilty of it. But it’s worth being aware of more generally. I think that academics have an especially strong obligation to communicate with intellectual honesty,5 even if dishonesty may (lamentably) sometimes be justified for politicians.6

(2) Media Misdirection: “But Her Emails!”

An especially important form of moral misdirection comes from misplaced media attention. In an ideal world, the prominence of an issue in the news media would highly correlate with its objective moral importance. Real-world journalistic practices notoriously fall far short of this ideal.

Election coverage is obviously especially high-stakes here, and it’s a perennial complaint that the media does not sufficiently focus on “the real issues” of importance: what practical difference it would make to have one candidate elected rather than the other. The media’s treatment of Hillary Clinton’s email cybersecurity as the #1 issue in the 2016 election was a paradigmatic example of moral misdirection. (No one could seriously believe that this was what an undecided voter’s decision rationally ought to turn on.)

A general lesson we can take from this is that scandals tend to absorb our attention in ways that are vastly disproportionate to their objective importance. (We would probably be better off, epistemically, were we to completely ignore them.) Politicians exploit this, whipping up putative scandals to make the other side look bad. Media coverage of them would be much more responsible if it foregrounded analysis of how, if at all, any given “scandal” should change our expectations about how the candidate would govern.

(3) Anti-Vax Scaremongering

Here’s another clear example of moral misdirection: highlighting the “risks” of vaccines, while ignoring or downplaying the far greater risks from remaining unvaccinated.

For a subtler (and hence more philosophically interesting) variation on the case: Consider how, at the peak of the pandemic, with limited vaccines available, western governments suspended access to some COVID vaccines (AstraZeneca in Europe, Johnson & Johnson in the US) due to uncertain risks of side-effects.

As I argued in my 2022 paper, ‘Pandemic Ethics and Status Quo Risk’, the suspensions communicated a kind of moral misinformation:7

Public institutions ought not to engage in strategic deception of the public. The idea that vaccine risks outweigh (either empirically or normatively) the risks of being unvaccinated during the pandemic is an instance of public health misinformation that is troublingly prevalent in our society. When public health institutions implement alarmist vaccine suspensions or other forms of vaccine obstructionism on strategic grounds, this communicates and reinforces the false message that the vaccine risks warrant such a response. Rather than trying to manipulate the public by pandering to unwarranted fears, public institutions have an obligation to communicate accurate information and promote the policies that are warranted in light of that information.

The most important thing for anyone to know during the pandemic was that they would be better off vaccinated ASAP. Any message that undermined this most important truth thus constituted (inadvertent) moral misdirection. To avoid this charge, public communication around the risks and side-effects of vaccines should always have been accompanied by the reminder that the risks and side-effects of getting COVID while unvaccinated were far more severe. When public health agencies instead engaged in alarmist vaccine suspensions, this was both (i) harmful, and (ii) intellectually dishonest. It’s no excuse that what they said about the risks and uncertainty was true. They predictably led their audience to believe much more important falsehoods.

It’s reasonable for public health agencies to want to insulate tried-and-true vaccines from the reputational risks of experimental vaccines (due to irresponsible media alarmism). But I think they should find a better way to do this. (One option: make clear that they do not vouch for the safety of these vaccines the way that they do for others. Downgrade them to “experimental” status. But allow access, and further communicate that many individuals may find, in consultation with their doctors, that the vaccine remains a good bet for them given our current evidence—despite the uncertainty—because COVID most likely posed a greater risk.)

Misleading Appeals to Complexity

“X is more complex than you’d realize from proponents’ public messaging,” is a message that academics are very open to (we love complexity!). But it’s also a message that can very easily slide into misdirection, as becomes obvious when you plug ‘vaccine safety’ in place of ‘X’.

To repeat my central claims:

Honest communication requires taking care not to mislead your audience. Honest public communication requires taking care not to mislead general audiences. True claims can still (very predictably) mislead.

In particular, over-emphasizing the “uncertainties” of overall good things can easily prove misleading to general audiences. (It’s uncertain whether any given immigrant will turn out to be a criminal—or to be the next Steve Jobs—but it would clearly constitute moral misdirection to try to make the “risk” of criminality more salient, as nativist politicians too often do.) Public communicators should appreciate the risks they run—not just morally, but epistemically—and take appropriate care in how they communicate about high-stakes topics. Remember: if you mislead your audience into believing important falsehoods, that is both (i) morally bad, and (ii) dishonest. The higher the stakes, the worse it is to commit this moral-epistemic vice.

How to Criticize Good Things Responsibly

I think it’s almost always possible to find a responsible way to express your beliefs. And it’s usually worth doing so: even Good Things can be further improved, after all. (Or you might learn that your beliefs are false, and update accordingly.)

To responsibly criticize a (possibly) Good Thing, a good first step is to work out (i) what its proponents take to be the most important truth, and (ii) whether you agree on that point or not.

Either way, you should be honest and explicit about your verdict. If you think that proponents’ “most important truth” is either unimportant or false, you should explicitly explain why. That would be the most fundamental and informative criticism you could offer to their view. (I would love for critics of my views to attempt this!)

If you agree that your target is correct about the most important truth in the context at hand, then in a public-facing article you should probably start off by acknowledging this. And end by reinforcing it. Generally try not to mislead your audience into thinking that the important truth is false. After first doing no epistemic harm, in the middle you can pursue your remaining disagreements.8 With any luck, everyone will emerge from the discussion with overall more accurate (importance-weighted) beliefs.

For an important example of this phenomenon, see my follow-up post, Anti-Philanthropic Misdirection.

1. As I was finishing up this post, I saw that Neil Levy & Keith Raymond Harris offer a similar example of “truthful misinformation” on the Practical Ethics blog. They’re particularly interested in communication that induces “false beliefs about a group”, and don’t make the general link to importance that I focus on in this post.

2. Huge thanks to Helen for many related discussions over the years that have no doubt shaped my thoughts, and for suggestions and feedback on an earlier draft of this post.

3. A tricky case: what if they misdirect as a result of sincerely but falsely believing that what they’re drawing our attention to is really more important than what they’re distracting us from? I’m not sure how best to extend the concept to this case. (Maybe it comes down to whether their false belief about importance is reasonable or not?) Either way, the main claim I want to make about this sort of case is that we would make more dialectical progress by foregrounding the background disagreement about importance.

4. I might be more sympathetic to a more limited claim, e.g. that excessive wokeness is one of the worst cultural tendencies on university campuses. (I don’t have a firm view on the matter, but that at least sounds like a live possibility; I wouldn’t be shocked if it turned out to be true.) But I don’t think campus culture is the most important political issue in the world. And I certainly don’t trust Republican politicians to be principled defenders of academic freedom!

5. It’s obviously valuable for society to have truth-seeking institutions and apolitical “experts” who can be trusted to communicate accurate information about their areas of expertise. When academics behave like political hacks for short-term political gain, they are undermining one of the most valuable social institutions that we have. As I previously put it: “Those on the left who treat academic research as just another political arena for the powerful to enforce their opinions as orthodoxy are making DeSantis’ case for him—why shouldn’t a political arena be under political control? The only principled grounds to resist this, I’d think, is to insist that academic inquiry isn’t just politics by another means.”

6. I find this really sad, but I assume an intellectually honest politician would (like carbon taxes) be a dismal political failure.

has convinced me of the virtues of political pandering. But that’s very much a role-specific virtue. Good politicians should pander so that they’re able to get the democratic support needed to do good things, given the realities of the actually-existing electorate and the fact that their competition will otherwise win and do bad things. As a consequence, no intelligent person should believe what politicians say. But, as per the previous note, it’s really important that people in many other professions (e.g. academics) be more trustworthy!

7. I also argued that killing innocent people (by blocking their access to life-saving vaccines) is not an acceptable means of placating the irrationally vaccine-hesitant. (I’m a bit surprised that more non-consequentialists weren’t with me on this one!)

8. Helen pointed me to this NPR article on the “perils of intense meditation” as a possible exemplar. They highlight in their intro that “Meditation and mindfulness have many known health benefits,” and conclude by noting that “the podcast isn’t about the people for whom this works.... The purpose is to scrutinize harm that is being done to people and to question why isn’t the organization itself doing more to prevent that harm.” This seems perfectly reasonable, and the framing helps to reduce the risk of misleading their audience.

"Moral misdirection, as it interests me here, is a speech act that functionally operates to distract one’s audience from more important moral truths. It thus predictably reduces the importance-weighted accuracy of the audience’s moral beliefs."

At face value, this implies that it is virtually impossible to deliberately engage in moral misdirection, since almost no one sets out to knowingly reduce the accuracy of others’ moral beliefs. Don, for example, thinks he is increasing the accuracy of his audience’s moral beliefs.

"It’s disheartening to consider how rare this form of intellectual integrity seems to be, even amongst intellectuals (in part because attention to the question of what is truly important is so rare). By drawing explicit attention to it, I hope to make it more common."

The complexity here is that none of us is anyone's sole interlocutor. Don could say, for example, "Sure, I only tell people about the crimes immigrants commit. That's fine; I'm just like a prosecutor in a trial. Immigrants have plenty of defence lawyers to tell their side of the story." Perhaps slightly more plausibly, consider someone who says "Look, obviously wokeness isn't the worst thing in America, but someone has to tell the story of how fucking annoying it is; that's my job. I don't claim to be the font of all wisdom, I'm just pushing a particular angle that I think has merit." I agree that a lot of these broken records on wokeness are very irritating, but capturing exactly what they're doing wrong is hard, given that plausibly no individual has an obligation to be a fair and balanced guide to the world.

Thanks for this very resonant piece.

I wonder if it's important to specify a norm against moral misdirection that doesn't let listeners off the hook for their own epistemic negligence.

I might be in a context where, for example, if I say that Trump's criminal sentencing reform bill was good, it will be taken as a sign that I endorse Trump, that I will vote for Trump, that Trump was a good president, etc., etc. when all of those things are in fact untrue. But it seems to me that there could be cases where I would be under no obligation to correct this misdirection, even if it would be a good thing for me to do. Intuitively, it feels to me that a theory of moral misdirection needs to account for the fact that sometimes it is better to hold listeners liable for their misunderstandings than the speakers who have 'misdirected' them.