I like candy. And I doubt I could bring myself to push someone in front of a train, even to save five other lives. The two may be more closely connected than it seems.

Nye, Plunkett & Ku’s ‘Non-Consequentialism Demystified’ is a fantastic paper. As summarized in the abstract:

> A central theoretical motivation for consequentialism is that it appears clear that there are practical reasons to promote good outcomes, but mysterious why we should care about non-consequentialist moral considerations or how they could be genuine reasons to act. In this paper we argue that this theoretical motivation is mistaken, and that because many arguments for consequentialism rely upon it, the mistake substantially weakens the overall case for consequentialism. We argue that there is indeed a theoretical connection between good states and reasons to act, because good states are those it is fitting to desire and there is a conceptual connection between the fittingness of a motive and reasons to perform the acts it motivates. But while some of our motives are directed at states, others are directed at acts themselves. We contend that just as the fittingness of desires for states generates reasons to promote the good, the fittingness of these act-directed motives generates reasons to do other things…

Their key distinction between act-directed and state-directed motives is illustrated by the difference between “wanting to exercise now vs. wanting the world to be such that one exercises now.” (p. 8) Intuitively, it makes a lot of sense for these to come apart! (Some people seem to love exercise—but alas, not I.) Exercise seems positively unappealing; I’d much sooner eat candy. Even so, I may well want the world to be such that I eat less candy and exercise more instead. I’d certainly be healthier that way; maybe I’d even be happier overall. So I get how these two kinds of motivation can come apart.

Where I depart from these authors[1] is that I take the appropriate state-directed attitude to properly settle what I ought to do (which is not to say that it settles what I’ll actually do, of course; I’m as weak-willed as anyone). If it’s really true that I ought to prefer the world in which I exercise more, then I ought to exercise more. The action’s lack of intrinsic appeal is normatively irrelevant. It may explain, psychologically, why I don’t actually exercise more; but it doesn’t do anything to really justify my weak-willed inaction. After all, the act-directed motivation is narrowly based on the intrinsic appeal of the action, while the state-directed motivation takes everything into account (including the act’s effects on distant and less salient interests, such as my future health).

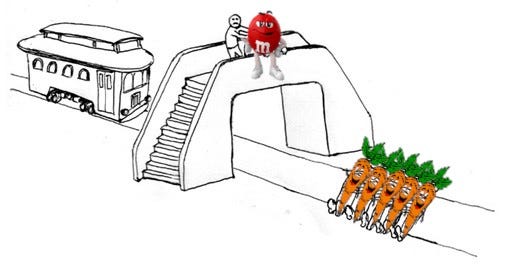

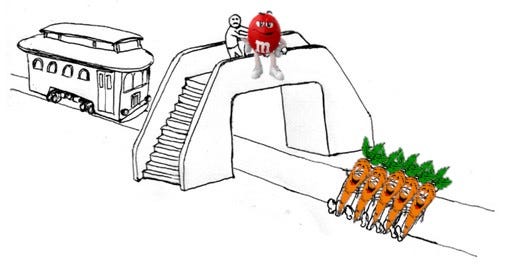

I think the same model may be fruitfully applied to deontic constraints. Killing people is (to any sane, decent person) deeply unappealing, to say the least. So it makes perfect sense to want not to kill the one in a trolley case, even if more lives would be saved by doing so. As in the case of exercise, these narrowly act-directed motivations are (in principle) apt to be overridden precisely because they tend not to be responsive to a sufficiently broad range of important considerations. We should not be surprised that extrinsically justified actions may be unappealing. That is, when an action’s justification stems from beyond the narrow confines of the act itself, the agent may need to steel their will in order to bring themselves to do what they have most overall reason to do.

This suggests an interesting debunking explanation of the intuitive appeal of deontology. Consequentialism is unappealing in much the same way that exercise or eating your vegetables is unappealing.[2] It requires you to take into account less salient interests and reasons, at the cost of more salient ones. As biased, limited agents, we naturally find this to be unpleasant. But as we teach our kids, they really should eat their vegetables even so.[3]

[1] For more detail, see sec. 3.2.2 (Act-Directed vs State-Directed Preferences) of my draft paper, ‘Preference and Prevention: A New Paradox of Deontology’. (Comments welcome!)

[2] Not exactly the same way, of course. We aren’t tempted to judge it immoral to swim through sewage to save someone’s life, unappealing though the act might be. It makes an important difference that the reasons not to kill are moral reasons, and very weighty ones at that. My point is just that even when there are still weightier moral reasons to kill the one, we shouldn’t expect act-directed motivations to support doing so. But we shouldn’t take this psychological limitation to settle the moral question, because it is based on a blatantly biased and blinkered view of the reasons and interests at stake.

[3] I should probably stress that this is a purely “in principle” point. In practice, of course, it doesn’t seem a good idea to teach anyone to push others off bridges! Commonsense moral rules are perfectly supportable on utilitarian grounds. The relevant claim is rather that constraints are not “first principles”, or normatively fundamental; they’re justified just insofar as they’re socially beneficial. So it’s an acceptable (even appropriate) implication of utilitarianism that there are hypothetical situations in which agents “should” violate rights, even though of course all real-world agents should be extremely averse to doing so, and perhaps even internalize rules that would prevent them from ever doing so.

One way of looking at the trolley problem is to ask what would happen if the fat man and the 5 hostages tied to the rails went behind the veil of ignorance and voted on which strategy to take. Obviously, in the problem as stipulated, they would unanimously choose for someone to push the fat man onto the rails. Deontologists are preferring a higher chance of getting killed to a lower one. Duh!

But this takes the situation as stipulated, which makes unrealistic assumptions about the certainty of our knowledge at the moment of choice. If the probability of success of the strategy of pushing the fat man is zero instead of one, the vote will go unanimously in the other direction. The question then becomes, are we certain enough of success to make the risk pay off? Deontologists would say that we have a word for those cases: “emergency.” Obey the speed limit, except when getting to the hospital one minute sooner might save a life.

People are pretty terrible at making such calculations with plenty of preparation time, even more so on the fly. The real trolley problem is, how on earth did anyone ever get into such a scenario? Why is trolley safety so lacking that unprepared random persons must make life and death decisions? If anyone is ever in a situation truly analogous to the trolley problem, someone has already made serious errors in judgement. Once such a situation is encountered, a decision must indeed be made. But ideally we would never encounter one.

Or would we? Hospital administrators effectively make life and death decisions when they allocate their budgets. Unfortunate children might be saved if the administrators spent the whole budget on super advanced pediatric facilities. They don’t have to personally murder any geriatric patients.

The problem with both Rawls and the trolley problem is that they imply that the calculus would be unambiguous if only we shed our biases and partiality. Just do the math. But that assumes that we have some shared value or commitment that should dominate, and it is only selfishness that prevents us from reaching consensus. Why should the lives of five captives matter to the abstract being behind the veil of ignorance? Once it has shed its biases and partialities, does it retain its will to live? Probably. (That’s one thing we all have in common, with the exception of failed suicides.) We don’t just desire to live, we desire to live in a way that is worthwhile, surrounded by those we care about, who are also living in a way that is worthwhile. But does that suffice to give us terms for our calculations? Or must we use rules and heuristics to overcome our ignorance, refining them as our knowledge changes? Is there still room for rational disagreement behind the veil?

Good post! I also think deontologists are like small children who do not eat their vegetables!